PyTorch automatic differentiation (autograd)

Rest in peace, Kobe. May the Mamba spirit live on!

For detailed usage, refer to the official documentation.

`import torch`

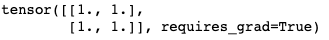

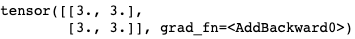

Perform an operation on the tensor:

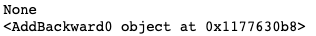

`Tensor` and `Function` are interconnected and build up an acyclic graph that records the complete history of the computation:

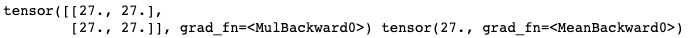
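As a minimal sketch of how this graph shows up in practice (the tensor names `x` and `y` are illustrative and may not match the screenshots), a tensor produced by an operation carries a `grad_fn` node, while a tensor created directly by the user does not:

```python
import torch

# A tensor created by the user; requires_grad=True asks autograd to track it.
x = torch.ones(2, 2, requires_grad=True)

# y is the result of an operation, so it is linked to the Function that made it.
y = x + 2

print(x.grad_fn)             # None: x was not produced by an operation
print(y.grad_fn)             # a backward node for the addition
print(x.is_leaf, y.is_leaf)  # x is a leaf of the graph, y is not
```

Following `grad_fn` from the final result back to the leaves is how autograd knows which derivatives to chain together.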

Perform more operations on `y`:

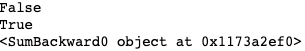
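A small sketch of chaining further operations, assuming `y` was built as `x + 2` from a tensor of ones (an assumption, since the exact values live in the screenshots). Every intermediate result keeps its own `grad_fn`:

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
y = x + 2            # every entry is 3

# More operations on y: an element-wise product, then a reduction to a scalar.
z = y * y * 3        # every entry is 27
out = z.mean()       # scalar, 27

print(z.grad_fn)     # backward node of the last multiplication
print(out.grad_fn)   # backward node of the mean
print(out.item())
```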

`.requires_grad_( ... )` changes an existing tensor's `requires_grad` flag in place. A tensor created without specifying `requires_grad` has the flag set to `False` by default.
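The following sketch illustrates the flag being switched on after creation; the names `a` and `b` are only for illustration:

```python
import torch

# Created without requires_grad, so the flag defaults to False.
a = torch.randn(2, 2) * 3
print(a.requires_grad)   # False

# Flip the flag in place; operations from here on are tracked.
a.requires_grad_(True)
print(a.requires_grad)   # True

b = (a * a).sum()
print(b.grad_fn)         # not None, because a now requires gradients
```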

Run backpropagation with `out.backward(y)`, where the argument supplies the gradient with respect to `out`:
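A hedged sketch of the two common ways to call `backward`: without arguments on a scalar result, and with an explicit gradient tensor on a non-scalar result. The names `w`, `r`, and `v` are hypothetical and chosen only for this example:

```python
import torch

# Case 1: scalar output, so backward() needs no argument.
x = torch.ones(2, 2, requires_grad=True)
out = ((x + 2) ** 2 * 3).mean()
out.backward()
print(x.grad)   # d(out)/dx = 1.5 * (x + 2), so every entry is 4.5

# Case 2: non-scalar output, so a gradient tensor of the same shape is passed.
w = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
r = w * 2
v = torch.tensor([0.1, 1.0, 0.001])
r.backward(v)   # computes the vector-Jacobian product v^T * J
print(w.grad)   # equals 2 * v
```

Passing `v` is needed in the second case because autograd computes a vector-Jacobian product rather than a full Jacobian.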

Stop autograd from tracking history on tensors, so that no gradients are computed for them:
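Two standard ways to do this are the `torch.no_grad()` context manager and `Tensor.detach()`. The sketch below shows both; the variable names are illustrative:

```python
import torch

x = torch.ones(2, 2, requires_grad=True)

# Inside no_grad, results are not attached to the graph.
with torch.no_grad():
    y = x * 2
print(y.requires_grad)   # False

# detach() returns a tensor with the same data but no history.
z = x.detach()
print(z.requires_grad)   # False
print(z.equal(x))        # True: same values as x
```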

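Note:

1. Gradients are computed only for tensors created directly by the user, i.e. the leaf tensors of the graph (called leaf Variables in older PyTorch versions).
2. The `requires_grad` flag of a tensor produced by an operation cannot be changed by the user; it is determined by the inputs of that operation.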
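Both points can be checked with a short sketch; the names `x`, `y`, and `flag_change_failed` are hypothetical:

```python
import torch

x = torch.ones(3, requires_grad=True)   # leaf tensor created by the user
y = x * 2                               # non-leaf result of an operation

y.sum().backward()
print(x.grad)      # tensor([2., 2., 2.]): the leaf receives a gradient
print(y.is_leaf)   # False

# Turning the flag off on a non-leaf tensor is rejected by PyTorch.
flag_change_failed = False
try:
    y.requires_grad = False
except RuntimeError as err:
    flag_change_failed = True
    print("could not change requires_grad:", err)
```

If a computed tensor is needed without gradient tracking, `y.detach()` is the usual alternative.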
